GenAI in Development: Boardroom vs Trenches

On the great divide between internet hype and messy reality

Anyone even casually following tech news (or ANY news, really) has heard how AI is making software development more efficient. Claims about 10x or 100x efficiency gains are not unheard of.

In fact, if you listen to AI Lab CEOs, they’re not just preaching improved developer efficiency - they’re talking about making software developers entirely obsolete, imminently. In short: AI will write all the software. According to Dario Amodei, AI might be able to write 90% of the software as soon as 3-6 months from now - which, given the prediction was made in March, puts it somewhere by the end of 2025. Sounds like your stereotypical Silicon Valley CEO’s or investor's wet dream - the former can’t wait to eliminate unreliable wetware from the equation; the latter realized that fortune to be made in selling shovels. So much so, in fact, that when I speak with senior engineering leaders, the challenge of dealing with CEO expecting 2x, 5x or 10x efficiency increase TODAY, becomes a recurring theme.

I remain sceptical about that. It’s one of those situations where everybody agrees on the direction; we only argue about the timing. The timing, though, is important. Go all-in too early, and you may drown in a deluge of AI-generated detritus. Do it too late, and you risk losing your competitive edge. And the truth is - no one really knows.

The realities of a typical software business are quite different from what internet influencers try to paint. Many leaders experience moderate efficiency gains from AI usage in software development. Now, assessing efficiency gains in software development is a tall order already. Various approaches have been tested over decades - starting from counting LOCs to DORA / SPACE metrics today. I am confident that a lot of consulting money was made on measuring development productivity along the way, too. The everyday practicalities of most software teams are that they just don’t measure productivity at all. If anything, they rely on loose, gut feeling as an indicator (“we seem to work on these longer than usual“ or “oh, we seem to introduce more bugs recently”).

Even with the gut-feeling approach, though, it seems that for most organizations, the typical AI-efficiency gains are in single-digit percentage numbers. This is slowly being backed by more and more research. In fact - and this is quite astounding as for the CEO of the company selling AI tooling - Sundar Pichai even cited “AI making Google engineers 10% more productive”. But it goes even further, recently the METR study made headlines online, with results showing an actual decrease in productivity with AI tooling.

Now, I find it very hard to believe that AI tools actually decrease productivity systematically. I do believe it can be the case in some specific, narrow cases, though. It might also be a case of a transition period - using a new tool and/or approach often leads to a temporary efficiency drop before people become fluent with the new ways of working. And besides, even a single-digit (or low two-digit) efficiency increase is worth billions of dollars in the industry. And that’s a conservative estimate!

However, the order-of-magnitude rift between online claims and the realities of software teams is stark.

Here are my thoughts on why I think that’s the case.

First of all, as with any gold rush, you can make shitloads of money by selling shovels. Most companies do that with exorbitant claims about the benefits they will bring. These claims, most of the time, either lack empirical substantiation or hold true only in narrowly defined contexts (e.g. synthetic benchmarks). We’ve seen this story before with public cloud, and especially with the alleged savings it was supposed to bring.

Second, at least as it is in mid-2025, most of these claims focus on the code-writing phase of the SDLC cycle. Typically, these are activities like code generation, debugging, code explanation, or brainstorming. The reality, however, is that for many organizations, writing code (or working with code in general) is only a part of the software engineering work. Depending on the source, it may be between 33% and 50% of the entire engineering time. And perhaps naturally, as the organization and/or seniority of the engineer grows, the pure code-writing phase shrinks. So even if the code writing phase has been improved by 2x, the end result might be that overall net productivity growth might be much less impressive 1.2x.

Third, many online claims come from small companies and startups - unsurprisingly, as they are typically the first ones to venture into uncharted territories. After all, agility is their key strategic advantage over larger incumbents. And at least partially because these companies are more relaxed about security or legal risks (in some cases, more relaxed is a euphemism for not giving a single fuck about these matters). Meanwhile, some of their larger competitors haven’t even managed to get Copilot approved by their legal or security teams (the opposite side of the spectrum).

Finally, and building on the previous point, startup codebases are typically neither large nor burdened by years of obscure changes driven by a tangled web of evolving requirements. Perhaps even more importantly, they are not being used by long-standing customers who depend on layers of osbscure logic up over years (often tailored to specific, idiosyncratic use cases). Customers, mind you, who will be very unpleased when the vibe-coded app starts to break.

Garry Tan said some time ago that for their recent batch AI is writing 95% of the code. It is quite jarring, but I tend to believe Garry.

Which leads us to the key difference I am seeing. Namely, AI tools are borderline absolutely fantastic for some specific cases. Some of these cases are:

working on the greenfield projects, which are completely or nearly completely separated from the rest of the organization's codebase (at least up to a point when these projects become too complex)

building throwaway POCs (even if they are not thrown away afterwards, which is the case more often than not)

using technology (e.g. programming language, libraries, or services) that you are not intimately familiar with.

They are good at other cases, too (e.g. brainstorming or being faster Google), but they excel at the above in the context of software engineering.

Guess what - most of the Y-Combinator batch are pre-product-market-fit companies - all they do from software engineering is write POC / MVP, and the last thing they (should) care about at that stage is long-lasting code quality/readability.

But here’s the thing — most tech leaders don’t work in greenfield settings or build throwaway POCs. As surprising as it may be, a lot of devs don’t work at a hot SV startup or big tech - but rather in some utility provider, midwestern logistics company, or maybe some financial institution. More often than not, they’re knee-deep in brownfield legacy systems.

As it is in mid-2025, AI tools are not overly good with such codebases. One thing is that these codebases often have aged code, with many custom abstraction layers (in other words, out-of-distribution data), separating it from any open source libraries they might use (codebase that is likely in-distribution for the given LLM model). The other thing is that these codebases tend to be sizable. It is not uncommon for these projects to be in the range of hundreds of thousands to millions of LOCs. And while context windows are steadily growing — with models like Gemini 2.5 Pro hitting 1M tokens — that alone doesn’t solve the large codebase problem.

The unfortunate reality is that extending the context window (like in the case of analysing a big codebase) often leads to problems like reduced attention focus or even accidental context-poisoning. You may experience this while working in agentic-mode in Cursor, while trying to provide an increasing amount of information to LLM while debugging a nasty bug, and at some point, realizing that the model suggestions degrade over time.

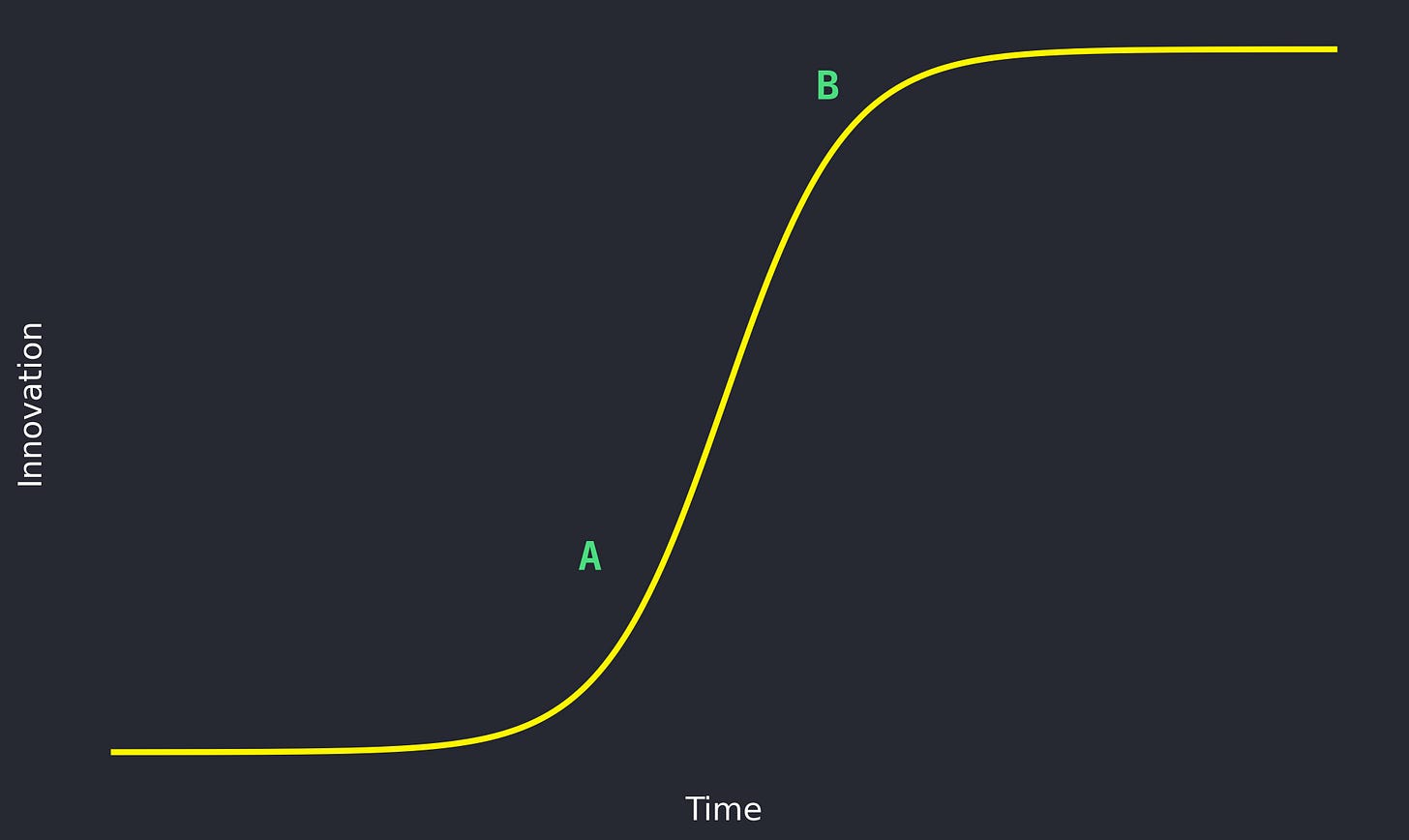

If GenAI development follows S-curve - which is typical for many new inventions that become widespread - we may expect interesting things.

The classic example is smartphones, which experienced massive advancement and innovation in technology between 2010 and 2020. However, it is, hard to deny that the technology has stagnated recently, barely anyone is excited about new iPhone launches these days, and the technological improvements have become mostly incremental.

It is not outside the realm of possibility that it will take way longer for AI to work effectively with brownfield codebase than most people anticipate. @dhh had a great anecdote on his recent Lex podcast about that. (The presentation he mentions is “Web Design: The First Hundred Years with Maciej Ceglowski” from 2014; great presentation by the way).

In other words, it is difficult to say at which point of the S-curve we are currently at (A or B, above).

When it comes to software engineering specifically, I’d say we are starting to see incremental improvements already. Recent releases are definitely less exciting than the ones from 2023-2024. Some feel like almost backward steps 🤷. But it is worth being open-minded - for instance, GPT-5 is allegedly just around the corner.

So, where does it put us?

Real-world productivity gains are, as it is now, overhyped in real production code bases. If you are struggling with marrying LLMs with your messy legacy system, you are not behind. The whole industry is struggling with it.

It is not too late to experiment - and experiment you should because even if the gains present a sobering contrast to the prevailing hype narrative, they remain very much real!

And there are definitely levels to this game - the ability to work effectively with LLMs, even (or especially) with legacy codebases, is a skill that can be levelled up to unlock additional gains. For example, proper code compartmentalization seems to help (alas, it often is an elusive goal in legacy codebases). Heavier investment in company-wide cursor rules has also shown promising results, etc.

And as crazy predictions go: Who knows - maybe LLMs will suck with large legacy codebases for some more time, which will lead us to a situation where it is more practical to rebuild a system (with AI!), instead of refactoring/extending an existing codebase. In that case, everything will be greenfield until it isn’t, in which case it will cease to exist.

Until then, keep your shovel sharp.